A developer's guide: Securing cloud-native applications in Kubernetes

Developers of cloud-native applications that run in Kubernetes are increasingly responsible for operational tasks beyond managing the application code itself. They define infrastructure as code (IaC), container images, pod configuration for Kubernetes deployment, Kubernetes RBAC, network services enabling pod communication, and more.

As developers become increasingly responsible for addressing security issues, they must understand the security implications of every artifact they create, both at each individual layer and as a system of connected layers. The highly dynamic nature of Kubernetes makes it complex and difficult to secure. Security mistakes and misconfigurations are common, whether from a lack of Kubernetes knowledge or from shortcuts developers take to build and deploy applications faster, including:

- Using bloated base images that contain numerous vulnerabilities

- Requesting host access to use the node file system as a temporary disk

- Running as root to expose privileged ports or access the node

- Using over-permissioned Kubernetes roles or cluster roles because RBAC is hard

- Allowing unrestricted network access to cloud resources such as managed databases

Combined, these and other mistakes mean that a single compromised library could give an attacker access to a container, then the node, the cluster, and ultimately the most sensitive cloud resources.
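Several of the shortcuts listed above are visible directly in a pod specification. The following is a minimal sketch, not a full policy engine, that inspects an already-parsed pod `spec` (a plain dictionary) for host path mounts, privileged containers, and containers that may run as root. The function name `find_risky_settings` and the sample specs are hypothetical; the field names (`hostPath`, `securityContext`, `runAsUser`, `runAsNonRoot`, `privileged`) are the standard Kubernetes ones.

```python
from typing import Any


def find_risky_settings(pod_spec: dict[str, Any]) -> list[str]:
    """Return human-readable findings for a parsed pod spec."""
    findings = []
    pod_ctx = pod_spec.get("securityContext") or {}

    # hostPath volumes mount part of the node file system into the pod.
    for volume in pod_spec.get("volumes", []):
        if "hostPath" in volume:
            path = volume["hostPath"].get("path")
            findings.append(f"volume '{volume.get('name')}' mounts hostPath {path}")

    for container in pod_spec.get("containers", []):
        name = container.get("name", "<unnamed>")
        ctx = container.get("securityContext") or {}

        if ctx.get("privileged"):
            findings.append(f"container '{name}' is privileged")

        # Container-level settings override pod-level settings.
        run_as_user = ctx.get("runAsUser", pod_ctx.get("runAsUser"))
        run_as_non_root = ctx.get("runAsNonRoot", pod_ctx.get("runAsNonRoot"))
        if run_as_user == 0 or (run_as_user is None and not run_as_non_root):
            findings.append(f"container '{name}' may run as root")

    return findings


# Hypothetical specs for the daily report job, before and after hardening.
risky_job = {
    "volumes": [{"name": "scratch", "hostPath": {"path": "/tmp"}}],
    "containers": [
        {"name": "report", "image": "example/report:latest",
         "securityContext": {"runAsUser": 0}},
    ],
}
hardened_job = {
    "securityContext": {"runAsNonRoot": True, "runAsUser": 10001},
    "volumes": [{"name": "scratch", "emptyDir": {}}],
    "containers": [
        {"name": "report", "image": "example/report:1.0",
         "securityContext": {"privileged": False}},
    ],
}
```

Here `find_risky_settings(risky_job)` reports the host path mount and the root user, while the hardened variant, which swaps the host path for an `emptyDir` scratch volume and sets a non-root user, produces no findings.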

Traditional AppSec tools, such as static application security testing and software composition analysis, focus on the application code created by a developer, often including its many dependencies. However, these tools ignore almost everything else. Securing cloud-native applications requires security guardrails and continuous monitoring across the entire application lifecycle, from source code and Kubernetes artifacts to applications running in production.

What’s required at each stage?

To illustrate how this works in practice, we’ll unpack a simple example: a job that runs for a few minutes per day, processes information from a database, generates a report, and uploads it to a private S3 bucket. To accomplish this, a developer must do the following:

- Develop any required custom application code

- Define the list of open-source dependencies used by the application

- Create a Dockerfile to build a container image for the application and its required components

- Write YAML files that define the workload deployed to Kubernetes

- Define a service account and role required to obtain the database credentials from Kubernetes

Effective cloud-native application security requires checking each of these artifacts at different points to ensure that they weren’t accidentally overlooked or purposefully bypassed – such as a pull request that wasn’t checked, or an application that skipped a normal CI process. Each stage of the application lifecycle also introduces new security variables and parameters that must be checked or observed before deploying to production. For example, during CI, a Dockerfile gets built into a Docker image that includes an OS and many software packages.

Code and IaC scanning

All code, whether application code or configuration code, created by a developer must be validated as it is checked in or committed to a project:

- Static application security testing of custom code is required to detect common vulnerability patterns, such as those in the Open Worldwide Application Security Project (OWASP) Top 10

- Scanning Dockerfile, YAML, Helm, and Terraform (IaC) files is required to identify misconfigurations or risky settings, such as those defined by CIS Benchmarks for Docker or Kubernetes

- Vulnerability management and software composition analysis (SCA) are required for checking application code and its OSS dependencies for known vulnerabilities (CVEs) listed in the National Vulnerability Database (NVD)

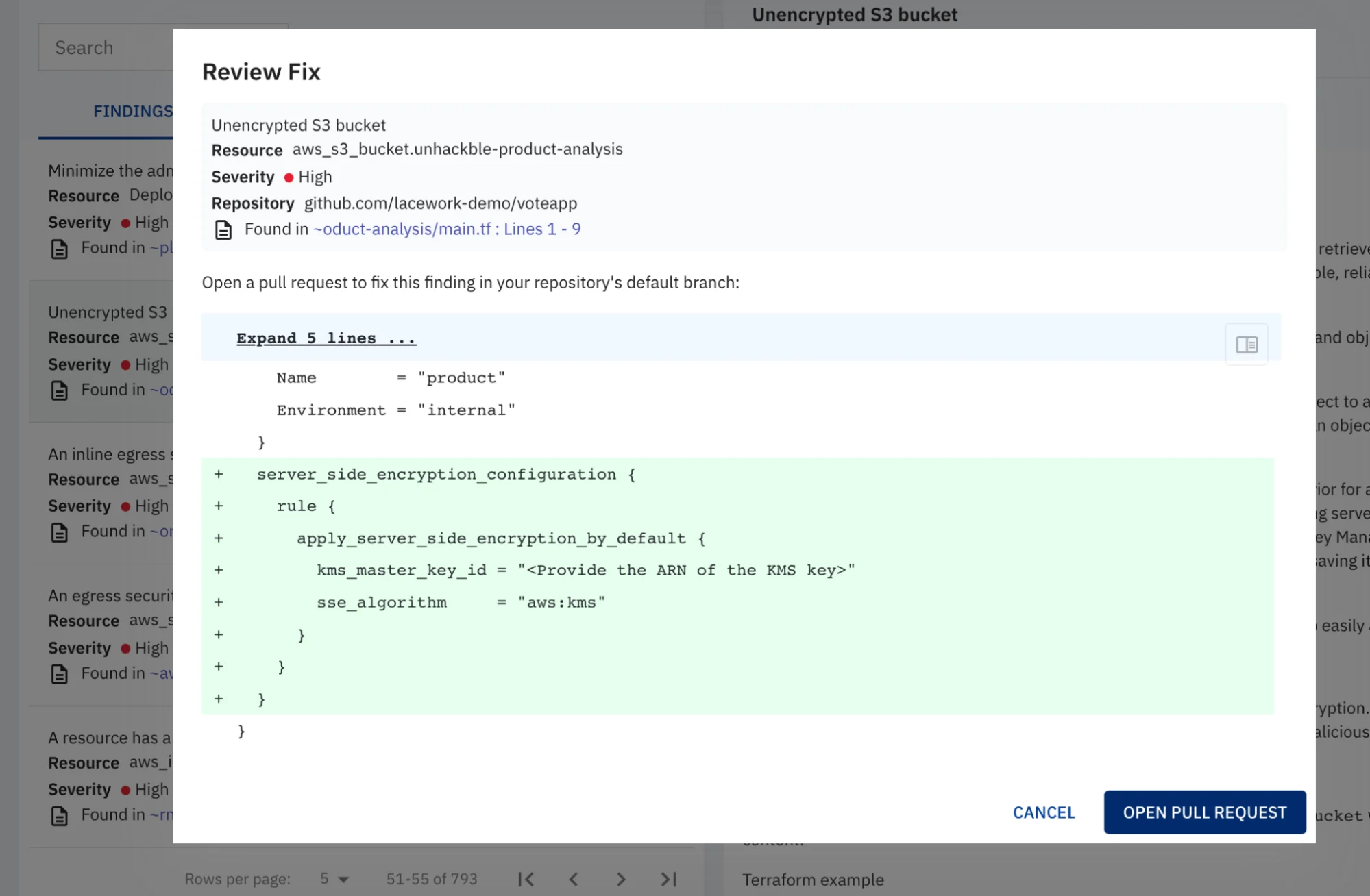

Vulnerable S3 configuration detected in Terraform file

These checks are usually accomplished by integrating security tools into a code repository’s pull request (PR) process, such as on GitHub or GitLab. Security and compliance violations can trigger a rejection of the code change or require additional reviews.
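To make the PR-time decision concrete, here is a small sketch of a software composition analysis check. It compares pinned dependency versions against a list of advisories and returns one of three outcomes: reject, require review, or approve. Everything in it is an assumption for illustration: the `Advisory` type, the package names, and the `EXAMPLE-…` identifiers are made up, and a real tool would pull advisories from a vulnerability feed and handle version schemes more complex than dotted integers.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Advisory:
    advisory_id: str            # placeholder ID, not a real CVE
    package: str
    fixed_in: tuple[int, ...]   # first version that contains the fix
    severity: str               # "low", "medium", "high", or "critical"


def parse_version(text: str) -> tuple[int, ...]:
    """Parse a simple dotted version such as '1.2.5' into (1, 2, 5)."""
    return tuple(int(part) for part in text.split("."))


def review_dependencies(
    dependencies: dict[str, str], advisories: list[Advisory]
) -> tuple[str, list[str]]:
    """Return a PR decision and the IDs of the advisories that apply."""
    hits = [
        adv for adv in advisories
        if adv.package in dependencies
        and parse_version(dependencies[adv.package]) < adv.fixed_in
    ]
    if any(adv.severity == "critical" for adv in hits):
        decision = "reject"
    elif hits:
        decision = "needs-review"
    else:
        decision = "approve"
    return decision, [adv.advisory_id for adv in hits]


# Hypothetical advisory data for two made-up packages.
ADVISORIES = [
    Advisory("EXAMPLE-0001", "examplelib", (1, 2, 5), "critical"),
    Advisory("EXAMPLE-0002", "otherlib", (2, 9, 0), "high"),
]
```

With this data, a PR that pins `examplelib` at `1.2.0` is rejected, one that pins `otherlib` at `2.8.0` is sent for additional review, and one that pins `examplelib` at `1.2.5` is approved.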

Continuous integration security

After the code is checked in, it can be built and tested through a continuous integration (CI) service such as GitHub Actions or Jenkins. For our example application, this step builds the container image, which includes a base image (Alpine, Ubuntu, etc.) and its packages, the custom application and its dependencies, and any other necessary packages or binaries.

The CI process should include a set of security checks on the source code and on the compiled application:

- Dynamic application security testing (DAST) examines applications while they are running, to determine whether they are vulnerable to certain attack techniques.

- Analysis of all the Kubernetes artifacts is required to show additional connections between roles, role bindings, and other Kubernetes policies.

- Scanning all packages contained in the container image will identify known vulnerabilities that should be remediated.
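The analysis of Kubernetes artifacts mentioned above often comes down to following references: a role binding points to a role, and the role's rules determine what the bound subjects can do. The sketch below walks that connection for already-parsed Role and RoleBinding manifests to answer "who can perform this verb on this resource?" The function name and sample objects are hypothetical, and the logic is deliberately simplified: it ignores namespaces, API groups, `resourceNames`, and the distinction between Roles and ClusterRoles.

```python
def subjects_with_access(
    roles: list[dict], bindings: list[dict], resource: str, verb: str
) -> list[str]:
    """Return sorted 'Kind/name' subjects whose bound role allows verb on resource."""

    def allows(rule: dict) -> bool:
        resources = rule.get("resources", [])
        verbs = rule.get("verbs", [])
        return (resource in resources or "*" in resources) and (
            verb in verbs or "*" in verbs
        )

    # Names of roles with at least one rule that grants the access.
    permissive = {
        role["metadata"]["name"]
        for role in roles
        if any(allows(rule) for rule in role.get("rules", []))
    }

    found = set()
    for binding in bindings:
        if binding["roleRef"]["name"] in permissive:
            for subject in binding.get("subjects", []):
                found.add(f"{subject['kind']}/{subject['name']}")
    return sorted(found)


# Hypothetical manifests: one narrowly scoped role and one wildcard role.
ROLES = [
    {"metadata": {"name": "report-secrets-reader"},
     "rules": [{"apiGroups": [""], "resources": ["secrets"], "verbs": ["get"]}]},
    {"metadata": {"name": "do-everything"},
     "rules": [{"apiGroups": ["*"], "resources": ["*"], "verbs": ["*"]}]},
]
BINDINGS = [
    {"roleRef": {"kind": "Role", "name": "report-secrets-reader"},
     "subjects": [{"kind": "ServiceAccount", "name": "report-job"}]},
    {"roleRef": {"kind": "Role", "name": "do-everything"},
     "subjects": [{"kind": "ServiceAccount", "name": "ci-bot"}]},
]
```

Asking who can `get` secrets returns both service accounts, while asking who can `delete` them returns only `ci-bot`, which is the kind of over-permissioned wildcard role this check is meant to surface.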

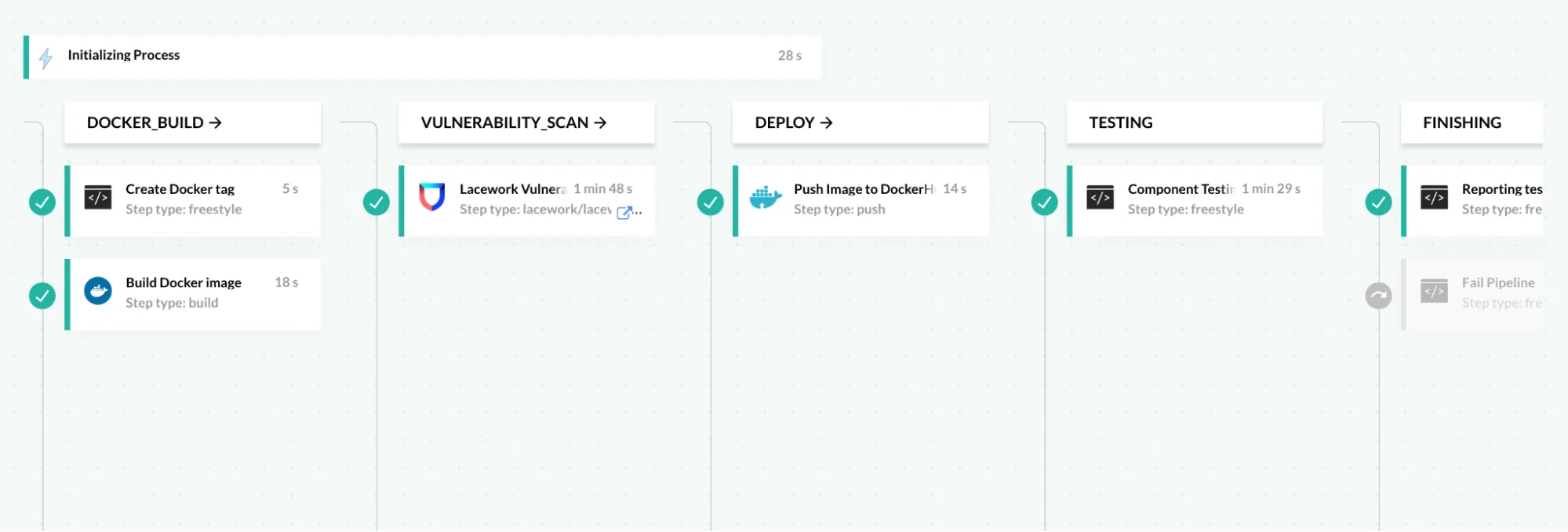

CI security includes scanning images for vulnerabilities

Any critical security issue identified within CI should either stop the pipeline, so that the application does not move to the next stage, or trigger a further review for manual override and approval.
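One way to express that rule in a pipeline is to turn scan results into an exit code. The sketch below is an assumption about how such a gate could look, not a description of any particular CI product: findings are plain dictionaries with made-up `id` and `severity` fields, and `approved_overrides` stands in for whatever manual approval record a team keeps.

```python
def ci_exit_code(
    findings: list[dict[str, str]],
    approved_overrides: frozenset[str] = frozenset(),
) -> int:
    """Return 0 to let the pipeline continue, or 1 to stop it."""
    blocking = [
        finding for finding in findings
        if finding["severity"] == "critical"
        and finding["id"] not in approved_overrides
    ]
    return 1 if blocking else 0


# Hypothetical results from an image scan.
SAMPLE_FINDINGS = [
    {"id": "IMG-001", "severity": "critical"},
    {"id": "IMG-002", "severity": "medium"},
]
```

A CI step could end with `sys.exit(ci_exit_code(findings))`, since most CI services treat a non-zero exit status as a failed step. Passing `frozenset({"IMG-001"})` as the override set models a reviewer explicitly accepting that finding.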

Continuous deployment as a last check

Many organizations use an additional continuous deployment (CD) pipeline to deploy new cloud-native applications into production. The CD process provides a final opportunity to validate that our example application meets all requisite security and compliance policies before it’s deployed.

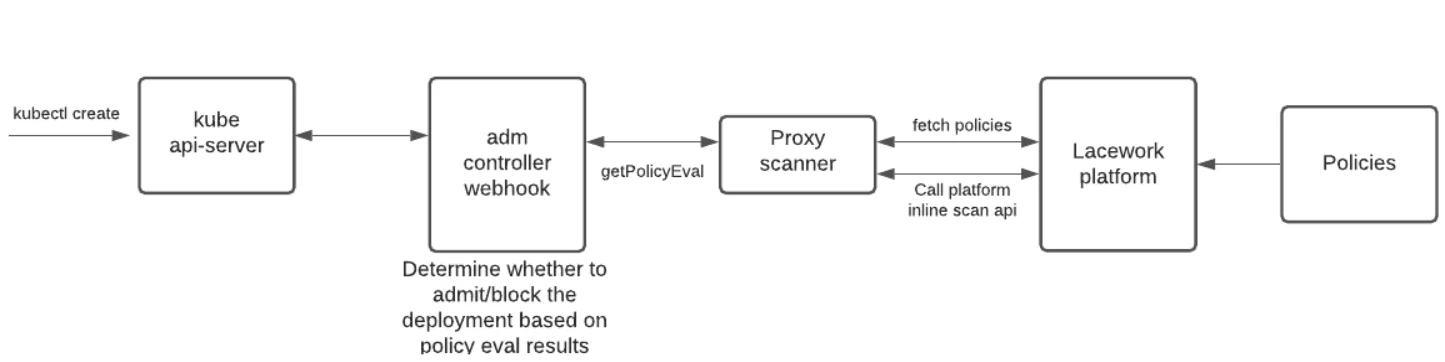

Just before the application is deployed, Kubernetes offers another set of guardrails with the Kubernetes admission control. This control point will validate any unauthorized changes or applications that may have bypassed the approved CI pipeline. Kubernetes can pass the definition of all new resources (policies, pods, RBAC, etc.) to a service that checks each configuration individually. Misconfigurations or overly permissive access can be blocked, at this final stage of the application lifecycle before deployment.

Admission controller blocks vulnerable images just before deployment
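The service that Kubernetes calls during admission control receives an `AdmissionReview` object and answers with an `AdmissionReview` whose `response` says whether the request is allowed. The sketch below shows only that decision logic for pod requests, assuming a hypothetical set of blocked image references. A working validating webhook also needs an HTTPS endpoint and a `ValidatingWebhookConfiguration`, both omitted here.

```python
def review_pod(admission_review: dict, blocked_images: set[str]) -> dict:
    """Build an AdmissionReview response for a pod admission request."""
    request = admission_review["request"]
    pod = request["object"]

    reasons = []
    for container in pod.get("spec", {}).get("containers", []):
        name = container.get("name", "<unnamed>")
        if container.get("image") in blocked_images:
            reasons.append(f"container '{name}' uses a blocked image")
        if (container.get("securityContext") or {}).get("privileged"):
            reasons.append(f"container '{name}' is privileged")

    # The response must echo the request uid and state whether it is allowed.
    response = {"uid": request["uid"], "allowed": not reasons}
    if reasons:
        response["status"] = {"message": "; ".join(reasons)}

    return {
        "apiVersion": "admission.k8s.io/v1",
        "kind": "AdmissionReview",
        "response": response,
    }


# Hypothetical request for the report job; the uid and image are made up.
SAMPLE_REVIEW = {
    "apiVersion": "admission.k8s.io/v1",
    "kind": "AdmissionReview",
    "request": {
        "uid": "abc-123",
        "object": {
            "spec": {
                "containers": [
                    {"name": "report", "image": "registry.example.com/report:1.0"}
                ]
            }
        },
    },
}
```

Calling `review_pod(SAMPLE_REVIEW, {"registry.example.com/report:1.0"})` denies the request with a message naming the container, while an empty blocklist allows it.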

Companies that are not yet ready to deploy an admission controller can identify critical risks by applying the same policies to Kubernetes audit logs. On managed Kubernetes (EKS, GKE, AKS, etc.), monitoring the audit logs does not require deploying containers. And while it doesn’t enable the blocking of resources, it does provide in-depth visibility into risks that may require immediate attention.

Continuous monitoring at runtime

Although our example application has been successfully deployed, it is no time to let our guard down. The Cloud Native Computing Foundation (CNCF) reminds us to “consider all microservices vulnerable — and monitor their behavior.” While hardening applications is an essential best practice, it cannot guarantee that they are 100 percent safe from attackers.

Vulnerabilities in existing packages and libraries can be discovered after the image has been tested and deployed. Flaws within complex code that has many transitive dependencies may go undetected. Known security issues, such as vulnerabilities without a fix, may have been flagged but not addressed. And unknown or zero-day vulnerabilities go undetected when there is no mechanism in place to discover them.

Any new application behavior should be continuously monitored and analyzed, such as connections to new domains, new processes, unexpected file writes, or the execution of new binaries, as these may all be signs of a compromise. A best practice for effectively monitoring hundreds or thousands of applications is to automatically baseline their normal behavior and detect when abnormal or unusual changes occur. This way, you will be aware of a change no matter how subtle or innocuous it appears.
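The baselining idea can be illustrated with a very small sketch: record what each workload does during a learning period, then flag anything not seen before. The `BehaviorBaseline` class and the event kinds are hypothetical, and real products use far richer models, but the core comparison looks roughly like this.

```python
from collections import defaultdict


class BehaviorBaseline:
    """Learn per-workload behavior, then flag events outside the baseline."""

    def __init__(self) -> None:
        self._known: dict[str, set[tuple[str, str]]] = defaultdict(set)
        self.learning = True  # set to False once the baseline period ends

    def observe(self, workload: str, kind: str, value: str) -> bool:
        """Record an event; return True if it is new after learning ended."""
        event = (kind, value)
        if event in self._known[workload]:
            return False
        if self.learning:
            self._known[workload].add(event)
            return False
        return True


# Learn the report job's normal behavior, then switch to detection.
baseline = BehaviorBaseline()
baseline.observe("report-job", "process", "python")
baseline.observe("report-job", "domain", "db.internal.example")
baseline.learning = False
```

After learning ends, `baseline.observe("report-job", "process", "python")` returns `False` because it matches the baseline, while a new process such as `curl` or a connection to an address the job has never contacted returns `True` and would be raised as an alert.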

Many new Kubernetes users rely solely on Kubernetes security posture management (KSPM) tools for risk visibility. While KSPM certainly plays a role within a layered approach to Kubernetes security, it ignores application behavior and therefore cannot detect in-progress threats, like an attacker who is using compromised credentials to move laterally. And since KSPM tools rely on periodic “snapshots,” they can miss applications or services that have very short lifespans, as well as transient roles that are created for a particular instance and then deleted.

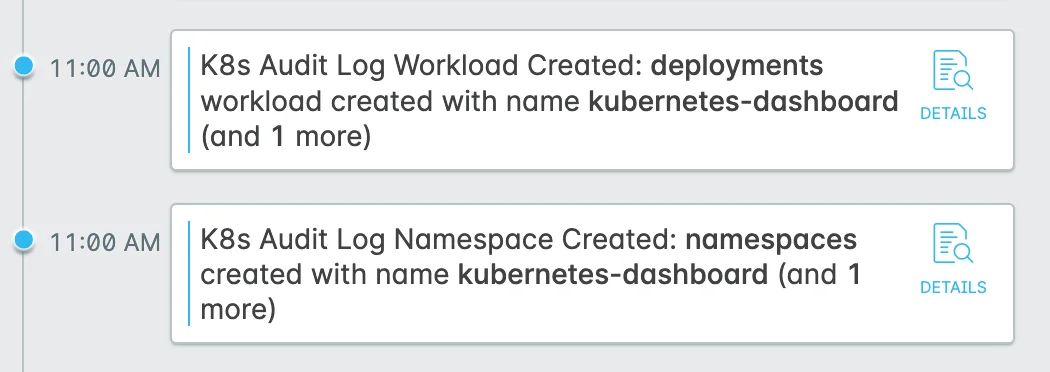

Effectively securing Kubernetes requires continuous assessment of Kubernetes audit logs, as they contain granular details about every resource created, including those that are highly ephemeral. Audit logs also provide visibility into API calls initiated by any pod to access Kubernetes secrets, create other pods, login into containers, and more.

Detection of the Kubernetes dashboard installation in a cluster
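As a rough illustration of assessing audit logs, the sketch below reads audit events in JSON-lines form and flags the three kinds of API calls mentioned above: reading secrets, creating pods, and exec-ing into containers. The field names (`stage`, `verb`, `user.username`, `objectRef.resource`, `objectRef.subresource`) follow the Kubernetes audit event format; the function name, alert wording, and sample events are assumptions made for this example.

```python
import json
from typing import Iterable


def flag_audit_events(lines: Iterable[str]) -> list[str]:
    """Return alert strings for sensitive API calls found in audit log lines."""
    alerts = []
    for line in lines:
        line = line.strip()
        if not line:
            continue
        event = json.loads(line)
        # Each request can be logged at several stages; count it once.
        if event.get("stage") != "ResponseComplete":
            continue

        ref = event.get("objectRef") or {}
        user = (event.get("user") or {}).get("username", "<unknown>")
        verb = event.get("verb")
        resource = ref.get("resource")
        subresource = ref.get("subresource")
        target = f"{ref.get('namespace')}/{ref.get('name', '*')}"

        if resource == "secrets" and verb in {"get", "list", "watch"}:
            alerts.append(f"{user} read secret {target}")
        elif resource == "pods" and subresource == "exec":
            alerts.append(f"{user} ran exec in pod {target}")
        elif resource == "pods" and subresource is None and verb == "create":
            alerts.append(f"{user} created a pod in {ref.get('namespace')}")
    return alerts


# Hypothetical events; names and namespaces are made up.
SA = "system:serviceaccount:default:report-job"
SAMPLE_LOG = [
    json.dumps({"stage": "ResponseComplete", "verb": "get",
                "user": {"username": SA},
                "objectRef": {"resource": "secrets", "namespace": "default",
                              "name": "db-credentials"}}),
    json.dumps({"stage": "ResponseComplete", "verb": "create",
                "user": {"username": SA},
                "objectRef": {"resource": "pods", "subresource": "exec",
                              "namespace": "default", "name": "report-abc"}}),
    json.dumps({"stage": "ResponseComplete", "verb": "get",
                "user": {"username": SA},
                "objectRef": {"resource": "configmaps", "namespace": "default",
                              "name": "settings"}}),
]
```

Running `flag_audit_events(SAMPLE_LOG)` yields two alerts, one for the secret read and one for the exec call, and ignores the config map read. Because every request is logged, a short-lived pod or role would still appear here even if a periodic snapshot missed it.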

Just as developers are increasingly responsible for operational tasks beyond the scope of managing the application code itself, application security must also break out of its box and be addressed as a continuous, connected set of automated best practices that span the entire cloud-native lifecycle.

To learn more about how Lacework helps you take a lifecycle approach to securing containers and Kubernetes, check out our ebook: 10 security best practices for Kubernetes.
